Getting Started

Use LangDB’s Python SDK, pylangdb, to generate chat completions, monitor API usage, retrieve analytics, evaluate LLM workflows, and trace agent frameworks.

Key Features

Quick Start (Chat Completions)

# Quote the extra so shells such as zsh do not treat [client] as a glob pattern
pip install "pylangdb[client]"

from pylangdb.client import LangDb

# Initialize LangDB client
client = LangDb(api_key="your_api_key", project_id="your_project_id")

# Simple chat completion
resp = client.chat.completions.create(
    model="openai/gpt-4o-mini",
    messages=[{"role": "user", "content": "Hello!"}],
)

print(resp.choices[0].message.content)

Agent Tracing Quick Start

Supported Frameworks (Tracing)

Framework | Installation | Import Pattern

Key Features

How It Works
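Tracing integrations of this kind commonly work by wrapping (monkey-patching) framework methods so that every call is recorded as a span. The sketch below shows that general technique on a stand-in Agent class with a hypothetical in-memory Tracer; it is an illustration of the technique, not pylangdb's actual implementation. It also shows why instrumentation is usually initialized before framework code runs: any reference to the original function captured before patching keeps calling the unwrapped version.

```python
import functools
import time


class Tracer:
    """Hypothetical in-memory tracer that records one dict per call."""

    def __init__(self):
        self.spans = []

    def record(self, name, duration_s, error=None):
        self.spans.append({"name": name, "duration_s": duration_s, "error": error})


def instrument(cls, method_name, tracer):
    """Replace cls.method_name with a wrapper that records a span per call."""
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        error = None
        try:
            return original(*args, **kwargs)
        except Exception as exc:
            error = repr(exc)
            raise
        finally:
            # Runs on success and failure, so every call produces exactly one span.
            tracer.record(f"{cls.__name__}.{method_name}", time.perf_counter() - start, error)

    setattr(cls, method_name, wrapper)


class Agent:
    """Stand-in for a framework class; not a real framework API."""

    def run(self, prompt):
        return f"echo: {prompt}"


tracer = Tracer()
early_ref = Agent.run            # captured before patching, so it stays untraced
instrument(Agent, "run", tracer)

agent = Agent()
print(agent.run("hi"))           # goes through the wrapper and is traced
early_ref(agent, "bye")          # bypasses the wrapper, so no span is recorded
print([s["name"] for s in tracer.spans])
```

functools.wraps keeps the wrapped method's name and docstring, which keeps tracebacks and introspection readable after patching.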

Installation

Configuration

Client Usage (Chat Completions)

Initialize LangDb Client

Chat Completions
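The offline sketch below mirrors the response shape used by the client (resp.choices[0].message.content) with types.SimpleNamespace, so the access path and multi-turn message handling can be tried without an API key or network call. It assumes, as in OpenAI-style chat APIs, that a multi-turn conversation is sent by appending earlier turns to the messages list. The helpers fake_response and extend_history are hypothetical and not part of pylangdb.

```python
from types import SimpleNamespace


def fake_response(text):
    """Build a stand-in object with the same attribute path as a chat completion."""
    message = SimpleNamespace(role="assistant", content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


def extend_history(messages, resp, next_user_turn):
    """Return a new message list with the assistant reply and the next user turn appended."""
    reply = resp.choices[0].message
    return messages + [
        {"role": reply.role, "content": reply.content},
        {"role": "user", "content": next_user_turn},
    ]


messages = [{"role": "user", "content": "Hello!"}]
resp = fake_response("Hi! How can I help?")
print(resp.choices[0].message.content)  # same access path as the real response
messages = extend_history(messages, resp, "Summarize LangDB in one sentence.")
print(len(messages))
```

With the real client, resp would come from client.chat.completions.create(...) and the extended messages list would be passed to the next call.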

Thread Operations

Analytics

Evaluate Multiple Threads

List Available Models
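Model identifiers in this SDK carry a provider prefix, as in openai/gpt-4o-mini from the Quick Start. Assuming every identifier follows that provider/model pattern, a model listing can be grouped by provider with plain string handling. The group_by_provider helper and the sample IDs below are illustrative only; they are not output from the LangDB API.

```python
from collections import defaultdict


def group_by_provider(model_ids):
    """Group 'provider/model' identifiers by provider; IDs without a slash go under ''."""
    groups = defaultdict(list)
    for model_id in model_ids:
        # partition splits on the first '/', so extra slashes stay in the model name
        provider, sep, name = model_id.partition("/")
        if not sep:
            provider, name = "", model_id
        groups[provider].append(name)
    return {provider: sorted(names) for provider, names in sorted(groups.items())}


# Hypothetical input; only openai/gpt-4o-mini appears in this document.
sample_ids = ["openai/gpt-4o-mini", "otherprovider/example-model", "openai/example-model"]
print(group_by_provider(sample_ids))
```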

Framework-Specific Examples (Tracing)

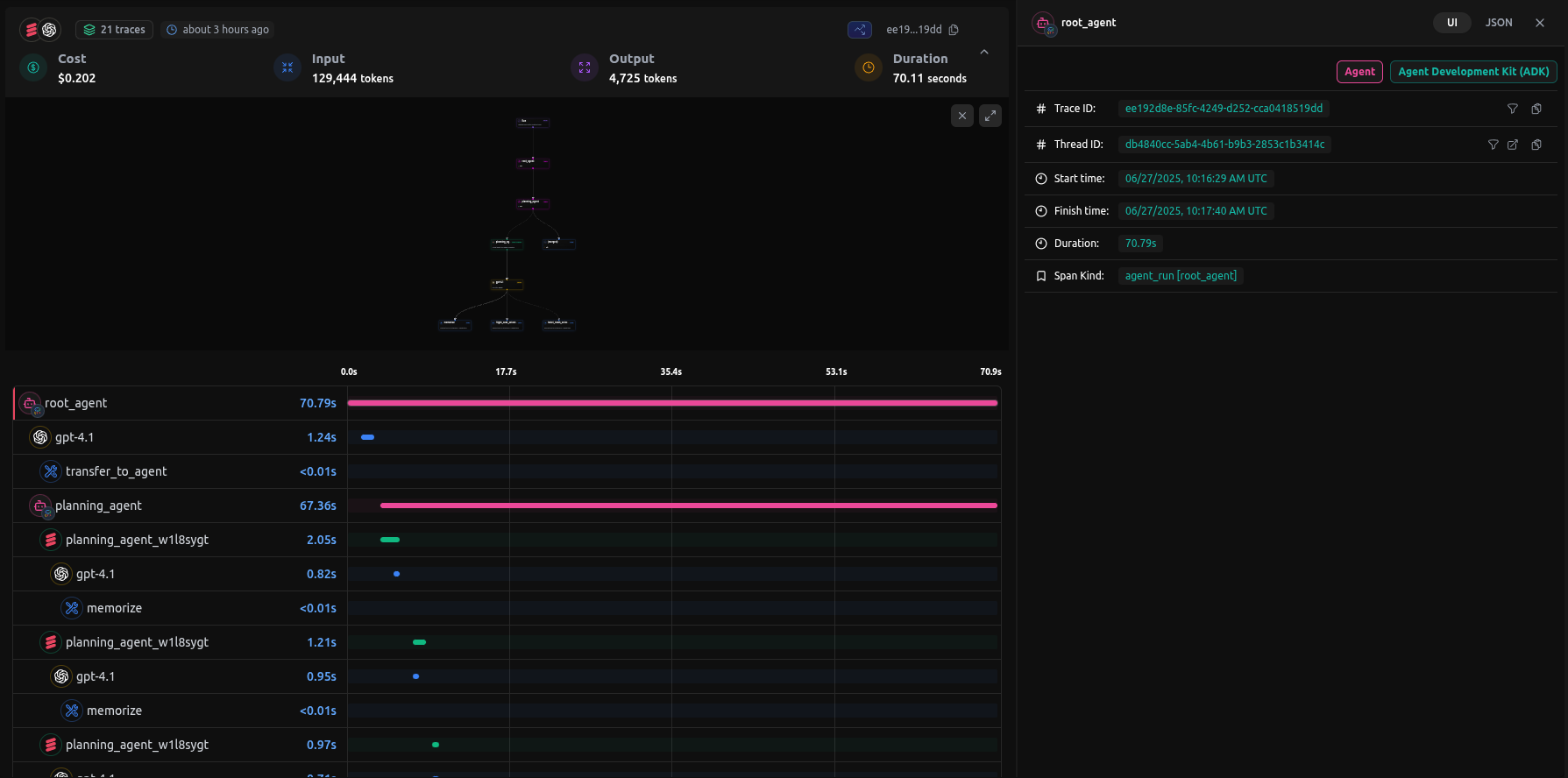

Google ADK

OpenAI

LangChain

CrewAI

Agno

Advanced Configuration

Environment Variables

Variable | Description | Default
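A common pattern for settings like these is to resolve each value from an explicit argument first, then an environment variable, then a default. The sketch below assumes the variable names LANGDB_API_KEY, LANGDB_PROJECT_ID, and LANGDB_API_BASE_URL; confirm the exact names and defaults in the SDK's documentation before relying on them. The base-URL default is a placeholder, not LangDB's real endpoint, and resolve_settings is a hypothetical helper.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed variable names; check them against the SDK documentation.
ENV_API_KEY = "LANGDB_API_KEY"
ENV_PROJECT_ID = "LANGDB_PROJECT_ID"
ENV_BASE_URL = "LANGDB_API_BASE_URL"
PLACEHOLDER_BASE_URL = "https://api.example.invalid"  # placeholder, not LangDB's endpoint


@dataclass(frozen=True)
class Settings:
    api_key: str
    project_id: str
    base_url: str


def resolve_settings(
    api_key: Optional[str] = None,
    project_id: Optional[str] = None,
    base_url: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
) -> Settings:
    """Explicit argument > environment variable > default; raise if a required value is missing."""
    env = os.environ if env is None else env
    key = api_key or env.get(ENV_API_KEY)
    project = project_id or env.get(ENV_PROJECT_ID)
    missing = [name for name, value in ((ENV_API_KEY, key), (ENV_PROJECT_ID, project)) if not value]
    if missing:
        raise ValueError(f"missing required settings: {', '.join(missing)}")
    url = base_url or env.get(ENV_BASE_URL) or PLACEHOLDER_BASE_URL
    return Settings(api_key=key, project_id=project, base_url=url)


# The explicit project_id wins over the environment value.
settings = resolve_settings(
    project_id="proj-override",
    env={"LANGDB_API_KEY": "sk-test", "LANGDB_PROJECT_ID": "proj-1"},
)
print(settings)
```

The resolved values could then be passed to LangDb(api_key=..., project_id=...) instead of hard-coding credentials in source files.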

Custom Configuration

Technical Details

Session and Thread Management
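Tracing tools typically separate a thread ID, which stays the same across every call in one conversation so related traces can be grouped, from a run ID that is unique to each execution. The sketch below models that split with a hypothetical Session class built on uuid4 and records the order of invocations; how pylangdb actually generates and attaches these identifiers is not shown here.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical holder for a conversation-scoped thread ID."""

    thread_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    runs: list = field(default_factory=list)

    def start_run(self):
        """Create metadata for one execution: shared thread ID, fresh run ID, call order."""
        run = {
            "thread_id": self.thread_id,
            "run_id": str(uuid.uuid4()),
            "sequence": len(self.runs),
        }
        self.runs.append(run)
        return run


session = Session()
first = session.start_run()
second = session.start_run()
print(first["thread_id"] == second["thread_id"])  # True: same conversation
print(first["run_id"] == second["run_id"])        # False: distinct executions
```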

Distributed Tracing
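Distributed tracing links spans produced in different processes by passing trace context along with each request. Assuming the SDK builds on OpenTelemetry, whose default propagator uses the W3C Trace Context traceparent header, the sketch below builds and parses that header by hand to show what travels between services: a 16-byte trace ID shared by the whole trace and an 8-byte span ID identifying the caller, which becomes the parent of the receiver's span. In practice an OpenTelemetry propagator does this for you; make_traceparent and parse_traceparent are hypothetical helpers.

```python
import re
import secrets

# version-00 layout: 00-<32 hex trace-id>-<16 hex parent-id>-<2 hex flags>
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def make_traceparent(trace_id, span_id, sampled=True):
    """Format a version-00 W3C traceparent header value."""
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header):
    """Return (trace_id, parent_span_id, sampled), or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero IDs are invalid per the specification
    return trace_id, span_id, bool(int(flags, 16) & 0x01)


# Service A starts a trace and sends the header with its outgoing request.
trace_id = secrets.token_hex(16)  # 32 hex characters
span_a = secrets.token_hex(8)     # 16 hex characters
header = make_traceparent(trace_id, span_a)

# Service B continues the same trace, with A's span as the parent of its own span.
parsed = parse_traceparent(header)
span_b = secrets.token_hex(8)
outgoing_from_b = make_traceparent(parsed[0], span_b)
print(parsed[0] == trace_id, parsed[1] == span_a, parsed[2])
```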

API Reference

Initialization Functions

Troubleshooting

Common Issues
