Getting Started

Use LangDB’s Python SDK to generate completions, monitor API usage, retrieve analytics, and evaluate LLM workflows efficiently.

Key Features

LangDB exposes two complementary capabilities:

Chat Completions Client: Call LLMs using the LangDb Python client. This works as a drop-in replacement for openai.ChatCompletion while adding automatic usage, cost, and latency reporting.

Agent Tracing: Instrument your existing AI framework (ADK, LangChain, CrewAI, etc.) with a single init() call. All calls are routed through the LangDB collector and enriched with framework-specific metadata, which is visible on the LangDB dashboard.

Quick Start (Chat Completions)

pip install pylangdb[client]

from pylangdb.client import LangDb

# Initialize LangDB client
client = LangDb(api_key="your_api_key", project_id="your_project_id")

# Simple chat completion
resp = client.chat.completions.create(
    model="openai/gpt-4o-mini",
    messages=[{"role": "user", "content": "Hello!"}]
)
print(resp.choices[0].message.content)

Agent Tracing Quick Start

Note: Always initialize LangDB before importing any framework-specific classes to ensure proper instrumentation.
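The ordering requirement follows from how Python binds names at import time. The sketch below is a generic, standard-library-only illustration and not LangDB's actual code: `fake_framework`, `call_model`, and `instrument` are hypothetical names. A reference taken before patching keeps pointing at the original function, while a reference taken afterwards sees the instrumented one.

```python
import types

# Hypothetical stand-in for a framework module (not a real package).
fake_framework = types.ModuleType("fake_framework")

def call_model(prompt):
    return f"echo: {prompt}"

fake_framework.call_model = call_model

traced_prompts = []

def instrument(module):
    """Replace module.call_model with a wrapper that records each prompt."""
    original = module.call_model

    def wrapper(prompt):
        traced_prompts.append(prompt)
        return original(prompt)

    module.call_model = wrapper

# Equivalent to `from fake_framework import call_model` BEFORE instrumenting.
early_ref = fake_framework.call_model

instrument(fake_framework)

# Equivalent to the same import AFTER instrumenting.
late_ref = fake_framework.call_model

early_ref("first")   # bypasses the wrapper, nothing is recorded
late_ref("second")   # goes through the wrapper and is recorded

print(traced_prompts)  # ['second']
```

Only the call made through the late reference is recorded, which is the situation the note above guards against.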

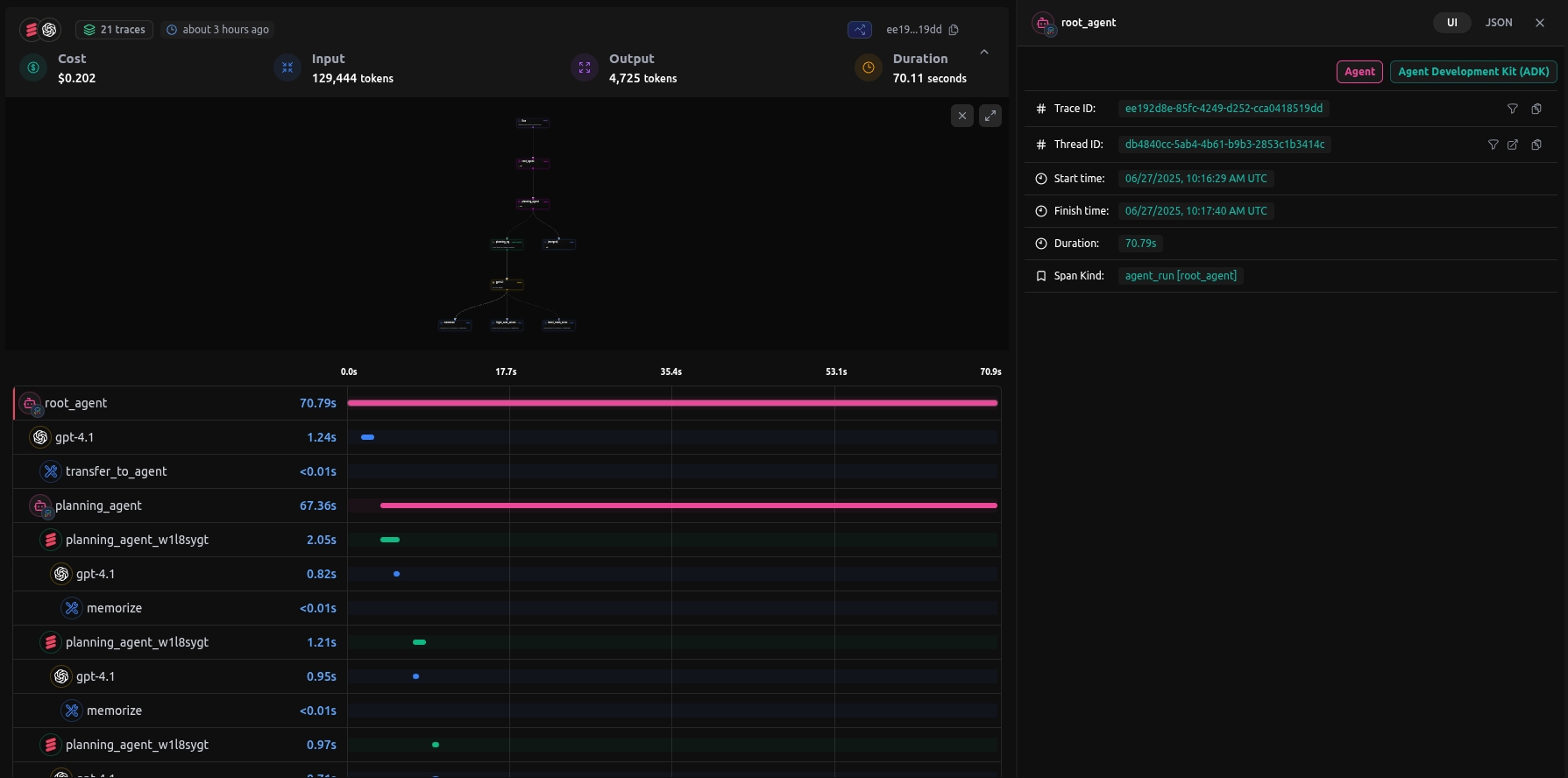

Example Trace Screenshot

Supported Frameworks (Tracing)

| Framework | Installation | Import | Key Features |
| --- | --- | --- | --- |
| Google ADK | pip install pylangdb[adk] | from pylangdb.adk import init | Automatic sub-agent discovery |
| OpenAI | pip install pylangdb[openai] | from pylangdb.openai import init | Custom model provider support and run tracing |
| LangChain | pip install pylangdb[langchain] | from pylangdb.langchain import init | Automatic chain tracing |
| CrewAI | pip install pylangdb[crewai] | from pylangdb.crewai import init | Multi-agent crew tracing |
| Agno | pip install pylangdb[agno] | from pylangdb.agno import init | Tool usage tracing, model interactions |

How It Works

LangDB uses monkey patching to instrument your AI frameworks at runtime, which is why a single init() call is enough to enable tracing.
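As a rough illustration of the technique, the following sketch wraps a method on a class at runtime so that each call produces a timing record. It is a generic example under stated assumptions, not LangDB's implementation: `FakeAgent`, `patch_method`, and the `spans` list are hypothetical names chosen for this sketch.

```python
import functools
import time

class FakeAgent:
    """Hypothetical stand-in for a framework's agent class."""

    def run(self, prompt):
        return prompt.upper()

spans = []  # collected trace records

def patch_method(cls, method_name):
    """Wrap cls.method_name so every call appends a span record."""
    original = getattr(cls, method_name)
    if getattr(original, "_is_patched", False):
        return  # already instrumented; avoid double wrapping

    @functools.wraps(original)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        try:
            return original(self, *args, **kwargs)
        finally:
            # Record the span even if the original method raised.
            spans.append({
                "name": f"{cls.__name__}.{method_name}",
                "duration_s": time.perf_counter() - start,
            })

    wrapper._is_patched = True
    setattr(cls, method_name, wrapper)

patch_method(FakeAgent, "run")
patch_method(FakeAgent, "run")  # second call is a no-op

result = FakeAgent().run("hello")
print(result, len(spans), spans[0]["name"])  # HELLO 1 FakeAgent.run
```

The agent code itself is unchanged; only the class attribute is swapped, so existing call sites pick up the instrumentation automatically.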

Installation

Configuration

Set your credentials (or pass them directly to the init() function):
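A minimal sketch of the environment-variable route, using only the standard library. The variable names come from this page; `load_credentials` is a hypothetical helper written for illustration and is not part of pylangdb.

```python
import os

def load_credentials(env=os.environ):
    """Return the LangDB credentials found in env, or raise if any is missing."""
    required = ("LANGDB_API_KEY", "LANGDB_PROJECT_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "api_key": env["LANGDB_API_KEY"],
        "project_id": env["LANGDB_PROJECT_ID"],
    }

# Placeholder values; in practice these are set in the shell or a secrets manager.
os.environ.setdefault("LANGDB_API_KEY", "your_api_key")
os.environ.setdefault("LANGDB_PROJECT_ID", "your_project_id")

creds = load_credentials()
print(sorted(creds))  # ['api_key', 'project_id']
```

The dictionary keys match the api_key and project_id arguments used by the client constructor in the Quick Start, so the values can be passed straight through.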

Client Usage (Chat Completions)

Initialize LangDb Client

Chat Completions

Thread Operations

Get Messages

Retrieve messages from a specific thread:

Get Thread Cost

Get cost and token usage information for a thread:

Analytics

Get analytics data for specific tags:

Evaluate Multiple Threads

List Available Models

Framework-Specific Examples (Tracing)

Google ADK

OpenAI

LangChain

CrewAI

Agno

Advanced Configuration

Environment Variables

| Variable | Description | Default |
| --- | --- | --- |
| LANGDB_API_KEY | Your LangDB API key | Required |
| LANGDB_PROJECT_ID | Your LangDB project ID | Required |
| LANGDB_API_BASE_URL | LangDB API base URL | https://api.us-east-1.langdb.ai |
| LANGDB_TRACING_BASE_URL | Tracing collector endpoint | https://api.us-east-1.langdb.ai:4317 |
| LANGDB_TRACING | Enable/disable tracing | true |
| LANGDB_TRACING_EXPORTERS | Comma-separated list of exporters | otlp, console |
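One way the optional variables might be interpreted is sketched below. The defaults are copied from the values listed above; the parsing rules (case-insensitive booleans, trimming whitespace around exporter names) and the names `TracingSettings` and `load_tracing_settings` are assumptions made for illustration, not documented pylangdb behavior.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TracingSettings:
    api_base_url: str
    tracing_base_url: str
    tracing_enabled: bool
    exporters: tuple

def load_tracing_settings(env):
    """Build settings from a mapping of environment variables, applying defaults."""
    enabled_raw = env.get("LANGDB_TRACING", "true")
    exporters_raw = env.get("LANGDB_TRACING_EXPORTERS", "otlp, console")
    return TracingSettings(
        api_base_url=env.get(
            "LANGDB_API_BASE_URL", "https://api.us-east-1.langdb.ai"
        ),
        tracing_base_url=env.get(
            "LANGDB_TRACING_BASE_URL", "https://api.us-east-1.langdb.ai:4317"
        ),
        # Assumed convention: "1", "true", or "yes" (any case) enables tracing.
        tracing_enabled=enabled_raw.strip().lower() in ("1", "true", "yes"),
        # Split the comma-separated list and drop empty entries.
        exporters=tuple(p.strip() for p in exporters_raw.split(",") if p.strip()),
    )

defaults = load_tracing_settings({})
custom = load_tracing_settings(
    {"LANGDB_TRACING": "false", "LANGDB_TRACING_EXPORTERS": "console"}
)
print(defaults.exporters, custom.tracing_enabled)  # ('otlp', 'console') False
```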

Custom Configuration

All init() functions accept the same optional parameters:

Technical Details

Session and Thread Management

Thread ID: Maintains consistent session identifiers across agent calls

Run ID: Unique identifier for each execution trace

Invocation Tracking: Tracks the sequence of agent invocations

State Persistence: Maintains context across callbacks and sub-agent interactions
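The relationship between these identifiers can be sketched as follows. This is an illustrative model only: `Session`, `Run`, `record_invocation`, and the use of UUIDs are assumptions for the example, not pylangdb's internal data structures.

```python
import uuid
from dataclasses import dataclass, field

@dataclass
class Run:
    """One execution trace inside a thread."""
    thread_id: str
    run_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    invocations: list = field(default_factory=list)

    def record_invocation(self, agent_name):
        """Append (sequence number, agent name) in call order."""
        self.invocations.append((len(self.invocations) + 1, agent_name))

@dataclass
class Session:
    """A conversation thread whose ID is shared by all of its runs."""
    thread_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def start_run(self):
        return Run(thread_id=self.thread_id)

session = Session()

run_a = session.start_run()
run_a.record_invocation("planner")
run_a.record_invocation("researcher")

run_b = session.start_run()

# Both runs share the thread ID but carry distinct run IDs.
print(run_a.thread_id == run_b.thread_id, run_a.run_id != run_b.run_id)  # True True
print(run_a.invocations)  # [(1, 'planner'), (2, 'researcher')]
```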

Distributed Tracing

OpenTelemetry Integration: Uses OpenTelemetry for standardized tracing

Attribute Propagation: Automatically propagates LangDB-specific attributes

Span Correlation: Links related spans across different agents and frameworks

Custom Exporters: Supports multiple export formats (OTLP, Console)

API Reference

Initialization Functions

Each framework has a simple init() function that handles all necessary setup:

pylangdb.adk.init(): Patches the Google ADK Agent class with LangDB callbacks

pylangdb.openai.init(): Initializes OpenAI agents tracing

pylangdb.langchain.init(): Initializes LangChain tracing

pylangdb.crewai.init(): Initializes CrewAI tracing

pylangdb.agno.init(): Initializes Agno tracing

All init() functions accept optional parameters for custom configuration (collector_endpoint, api_key, project_id).

Troubleshooting

Common Issues

Missing API Key: Ensure LANGDB_API_KEY and LANGDB_PROJECT_ID are set

Tracing Not Working: Check that initialization functions are called before creating agents

Network Issues: Verify collector endpoint is accessible

Framework Conflicts: Initialize LangDB integration before other instrumentation
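For the network check, a plain TCP connection attempt is often enough to tell whether the collector port is reachable. The sketch below uses only the standard library; `can_connect` is a hypothetical helper, and the host and port are taken from the default LANGDB_TRACING_BASE_URL listed under Environment Variables. It only verifies that the port accepts connections, not that the credentials are valid.

```python
import socket

def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False

if __name__ == "__main__":
    # Host and port from the default tracing endpoint on this page.
    print(can_connect("api.us-east-1.langdb.ai", 4317))
```

A False result points to DNS, firewall, or proxy problems between your machine and the collector rather than to the SDK configuration.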
